On the journey of

[핸즈온머신러닝] Chapter 3(3.1~3.7) 본문

3.1 MNIST

💡 MNIST 데이터셋 (Modified National Institue of Standards and Technology Dataset) : 고등학생과 미국 인구조사국 직원들이 손으로 쓴 70,000개의 작은 숫자이미지를 모은 데이터셋

- 머신러닝 분야의 ‘Hello World’와 같은 학습용 데이터셋

- MNIST 데이터셋 가져오기

- ‘mnist_784’ : id

- version=1 : 하나의 id에 여러 개의 버전이 있음

- 출력값

- {'COL_NAMES':['label', 'data'], 'DESCR': ... 'data' : array([[0, 0, 0, ..., 0, 0, 0], [0, 0, 0, ..., 0, 0, 0], [0, 0, 0, ..., 0, 0, 0], ... [0, 0, 0, ..., 0, 0, 0], [0, 0, 0, ..., 0, 0, 0], [0, 0, 0, ..., 0, 0, 0]], dtype=uint8), 'target': array([0., 0., 0., ..., 9., 9., 9.]) }

- 딕셔너리 구조를 가짐.

- ‘DESCR’ : 데이터셋 설명

- from sklearn.datasets import fetch_openml mnist = fetch_openml('mnist_784', version=1, as_frame=False)

- MNIST 데이터셋은 크기가 커서 sklearn에 내장되어있지 않고 openml.org의 머신러닝 데이터 레포지터리에서 다운로드한다. 이를 도와주는 것이 sklearn의 fetch_openml

- data와 target 배열 살펴보기

X, y = mnist['data'], mnist['target']

X.shape # (70000, 784)

y.shape # (70000,)

- 이미지가 70,000개 있고 각 이미지에는 784(28*28)개의 특성이 있음.

- 각 특성은 0(흰색)부터 255(검은색)까지의 픽셀 강도

- MNIST 데이터 하나 살펴보기

%matplotlib inline

import matplotlib

import matplotlib.pyplot as plt

some_digit = X[0] # as_frame=False 아니면 KeyError

some_digit_image = some_digit.reshape(28, 28) # 재배열

plt.imshow(some_digit_image, cmap=matplotlib.cm.binary, interpolation="nearest")

plt.axis("off")

plt.show()

- y[0] 출력 결과는 ‘5’로 맞는 레이블이 되어있음을 알 수 있다.

- 테스트 세트의 설정

- 데이터를 분석하기 전에 항상 테스트 세트를 구분해놓아야 함

- MNIST 데이터셋은 이미 훈련 세트(앞쪽 60,000개 이미지)와 테스트 세트(뒤쪽 10,000개 이미지)로 나뉨

- 이미지가 랜덤하게 섞여있기때문에 순서대로 나눠도 무방

X_train, X_test, y_train, y_test = X[:60000], X[60000:], y[:60000], y[60000:] - 순서대로 나눈 것이 걱정된다면?

- 하나의 폴드에 특정 숫자가 누락된 경우,

- 비슷한 샘플이 연이어 나타나는 경우,

- 학습 알고리즘의 성능이 나빠짐

import numpy as np shuffle_index = np.random.permutation(60000) X_train, y_train = X_train[shuffle_index], y_train[shuffle_index]

3.2 이진분류기 훈련

<aside> 💡 이진분류기 (Binary Classifier) : 데이터를 양성 클래스와 음성 클래스 두 가지로 분류 : 기본에 MNIST 데이터셋을 0~9의 수로 분류하는 것은 다중분류의 예

</aside>

- 기존 MNIST 데이터셋 분류 문제를 단순화하여 ‘5’와 ‘5가 아닌 수’를 분류하는 이진분류기를 만들어보고자 함.

- 분류 작업을 위해 타깃 벡터를 만듦

y_train_5 = (y_train == 5) # 5라면 True로, 5가 아닌 다른 수라면 False로 저장

y_test_5 = (y_test == 5)

- 분류 모델 중 하나인 확률적 경사하강법(Stochastic Gradient Descent, SGD) 분류기 이용. (자세한 내용은 다음장에 나오므로 세부 설명 생략)

- sklearn의 SGDClassifier 클래스 이용

from sklearn.linear_model import SGDClassifier

sgd_clf = SGDClassifier(max_iter=5, random_state=42)

sgd_clf.fit(X_train, y_train_5)

- 모델을 사용하여 숫자 5의 이미지 감지

sgd_clf.predict([some_digit]) # array([True], dtype=bool), 특별하게 맞춘 케이스

3.3 성능 평가

- 분류기 평가를 위한 여러 성능 지표 비교

3.3.1 교차 검증을 사용한 정확도 측정

<aside> 💡 K-겹 교차 검증 (K-Fold Cross Validation) : 훈련세트를 K개의 폴드로 나누고, 각 폴드에 대해 예측을 만들고 평가하기 위해 나머지 폴드로 훈련시킨 모델을 사용

</aside>

- cross_val_score() 함수 이용 : 정확도(accuracy)

from sklearn.model_selection import cross_val_score

cross_val_score(sgd_clf, X_train, y_train_5, cv=3, scoring="accuracy") # K = 3

# array([0.9502, 0.96565, 0.96495])

- 모든 정확도가 95% 이상 → “좋은 결과인가?”

- 예시

- 단순 계산으로 전체 MNIST 데이터셋에 숫자 5의 이미지는 약 10% 정도라고 가정하면 무조건 5가 아니라고 예측한다면 90% 내외의 정확도를 얻을 수 있음

- 정확도는 특히 불균형한 데이터셋을 다룰 때 분류기의 성능 측정 지표로 선호되지 않는다.

- from sklearn.base import BaseEstimator class Never5Classifier(BaseEstimator): def fit(self, X, y=None): pass def predict(self, X): return np.zeros((len(X), 1), dtype=bool) never_5_clf = Never5Classifier() cross_val_score(never_5_clf, X_train, y_train_5, cv=3, scoring='accuracy') # array([0.909, 0.90715, 0.9128])

3.3.2 오차 행렬

💡 오차 행렬 (confusion matrix) : 실제로 참인지 거짓인지, 예측을 긍정으로 했는지, 부정으로 했는지에 따라 네 개의 경우의 수로 구분한 표

- 오차 행렬 만들기

- 우선 실제 타깃과 비교할 수 있도록 예측값을 만들어야 함 → cross_val_predict() 함수 이용 : 각 테스트 폴드에서 얻은 예측 반환

from sklearn.model_selection import cross_val_predict y_train_pred = cross_val_predict(sgd_clf, X_train, y_train_5, cv=3)- confusion_matrix() 함수 이용하여 오차 행렬 만듦.

예측 음성 예측 양성from sklearn.metrics import confusion_matrix confusion_matrix(y_train_5, y_train_pred) # array([[53272, 1307], # [1077, 4344]])실제 음성 진짜음성 (TN) 가짜양성 (FP) 실제 양성 가짜음성 (FN) 진짜양성 (TP) - 완벽한 분류기라면 FP = FN = 0

💡 정밀도 (precision) : 양성 예측의 정확도 : 양성클래스로 예측한 것 중 진짜양성의 비율

정밀도 = {TP}/{TP+FP}

💡 재현율 (recall) = 민감도 (sensitivity) = 진짜양성비율 (true positive rate; TPR) : 분류기가 정확하게 감지한 양성 샘플의 비율 : “실제 양성 중 진짜양성으로 얼마나 분류되었는가”

재현율 ={TP}/{TP+FN}

- ex)

3.3.3 정밀도와 재현율

- 파이썬에서는 정밀도와 재현율을 계산하는 함수를 제공

from sklearn.metrics import precision_score, recall_score

precision_score(y_train_5, y_train_pred) # == 4344 / (4344 + 1307)

# 0.768713...

recall_score(y_train_5, y_train_pred) # == 4344 / (4344 + 1077)

# 0.801328...

- 3.2.1의 정확도에 비해, 5로 판별된 이미지 중 77%만 정확하고 전체 숫자 5에서 80%만 감지한 사실은 성능이 좋아보이지 않음

💡 F1점수 (F1 score) : 정밀도와 재현율의 조화 평균(harmonic mean) : 두 분류기를 비교할 때 편리

- F1 점수 계산 시 f1_score() 함수 이용

from sklearn.metrics import fl_score

fl_score(y_train_5, y_train_pred)

# 0.78468...

- 정밀도와 재현율의 반비례 관계를 정밀도/재현율 트레이드오프라고 함.

3.3.4 정밀도/재현율 트레이드 오프

SGDClassifier의 분류를 통해 트레이드 오프 이해

이 분류기는 결정함수를 사용하여 각 샘플의 점수를 계산

- 샘플점수>임곗값→양성클래스

- 샘플점수<임곗값→음성클래스

5에 대한 train data를 사용할 때, 실제 양성 (# of (tp+fn)) = 6이므로 맨 오른쪽 임계값에 대해

정밀도 = 진짜 양성(# of 분류기 실제 5의 수 = 3 ) / 양성으로 예측( # of 분류기 감지 = 3) = 100%

재현율 = 진짜 양성(# of 분류기 실제 5의 수 = 3 ) / 실제 양성( # of 실제 5인 값 = 6) = 50%

임계값이 높아질 수록 정밀도 ↑ 재현율 ↓

임계값이 낮아질 수록 정밀도 ↓ 재현율 ↑

SGDClassifier의 특정 클래스(0~9중 1개)에 대한 예측값

y_scores = sgd_clf.decision_function([some_digit])

y_scores

>>array([303905.39584261])

# 임계점 = 0에 대한 예측값

threshold = 0

y_some_digit_pred = (y_scores>threshold)

y_some_digit_pred

>>array([ True]) #3를 잘 예측한 것을 알 수 있음

임계값 증가하면 재현율이 줄어듦

즉, 임계값이 0일땐, 분류기가 숫자 3를 감지했으나 임계값이 100000000로 높이면 이를 놓치게 됨

# 임계점: 0 → 100000000

threshold = 100000000

y_some_digit_pred = (y_scores > threshold)

y_some_digit_pred

>>array([False]) # 임계점을 높이니 3를 예측하지 못함

# 임계점 계산

y_scores = cross_val_predict(sgd_clf, X_train, y_train_3, cv = 3,

method = 'decision_function')

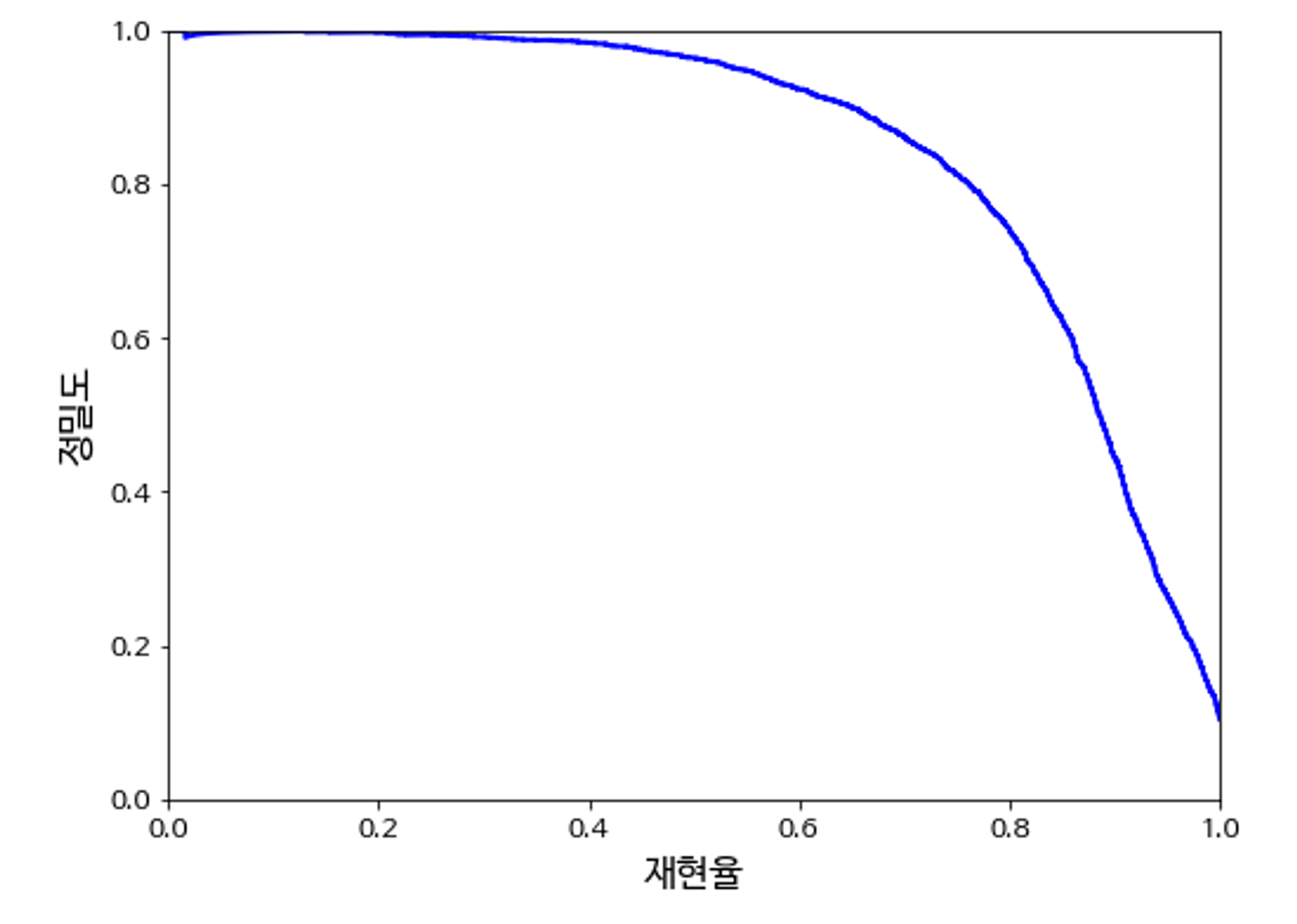

precision_recall_curve(): 모든 임계값에 대한 정밀도와 재현율 계산

from sklearn.metrics import precision_recall_curve

precisions, recalls, thresholds = precision_recall_curve(y_train_3, y_scores)

임계값에 대한 정밀도/재현율 함수

정밀도와 재현율의 트레이드오프 관계를 알 수 있음

정밀도는 높이되, 재현율을 어느정도 확보할 수 있는 적절한 임계점을 찾는 것이 중요

# 정밀도 90 목표로 세팅

y_train_pred_90 = (y_scores > 70000)

print(precision_score(y_train_3, y_train_pred_90)) #정밀도

>>0.8810385188337945

print(recall_score(y_train_3, y_train_pred_90)) #재현율

>>0.675256891208612

3.5 ROC 곡선

💡 reciver operating characteristic : 거짓양성비율(FPR)에 대한 진짜 양성비율(TPR, 재현율)

- FPR = 거짓양성/실제 음성

- TNR(특이도) = 진짜음성/실제 음성

ROC 곡선은 민감도(재현율,TPR)에 대한 1- 특이도(TNR)

from sklearn.metrics import roc_curve

# roc_curve를 이용해 여러 임곗값에서 TPR과 FPR 계산

fpr, tpr, thresholds = roc_curve(y_train_3, y_scores)아래 그래프를 보면 알 수 있듯,

재현율 ⇑ → 분류기가 만드는 거짓 양성 ⇑

곡선 아래의 면적(area under the curve:AUC) 완벽한 분류기는 ROC의 AUC가 1이고, 완전한 랜덤 분류기는 0.5

무작위로 예측할경우 오차 행렬의 실제 클래스가 비슷한 비율의 예측 클래스로 나뉘어 FPR과 TPR의 값이 비슷해짐.

결국 ROC 곡선이 y=x에 가깝게 되어 AUC 면적이 0.5가 됨.

SGDClassifier vs 랜덤포레스트 Classifier

#RandomForestClassifier

# decision_function()이 없으므로 predict_proba()이용

from sklearn.ensemble import RandomForestClassifier

forest_clf = RandomForestClassifier(n_estimators=10, random_state=42)

y_probas_forest = cross_val_predict(forest_clf, X_train, y_train_3, cv=3,

method="predict_proba")

#ROC 곡선을 그리려면 확률이 아닌 점수 필요

# 양성클래스의 확률을 점수로 활용

y_scores_forest = y_probas_forest[:, 1] #set 양성클래스 확률 = 점수

fpr_forest, tpr_forest, thresholds_forest = roc_curve(y_train_3,y_scores_forest)

# randomforestcalssfier >> SGDClassfier roc 그래프 그림

plt.figure(figsize=(8, 6))

plt.plot(fpr, tpr, "b:", linewidth=2, label="SGD")

plot_roc_curve(fpr_forest, tpr_forest, "랜덤 포레스트")

plt.legend(loc="lower right", fontsize=16)

plt.show()

# 랜덤포레스트 분류기의 score

from sklearn.metrics import roc_auc_score

roc_auc_score(y_train_3, y_scores_forest) # SGD값보다 향상 큼

>>0.98636272577489063.4 다중 분류

* 다중 분류기(다항 분류기): 둘 이상의 클래스를 구별

- 이진 분류기를 여러개 사용해 다중 클래스 분류 예) 0~9까지 훈련시켜 클래스가 10개인 숫자 이미지 분류

- OvA(일대다 전략): 시스템에서 이미지를 분류할 때 각 분류기의 결정 점수 중에서 가장 높은 것을 클래스로 선택

- OvO(일대일 전략): 토너먼트식으로 0과 1 구별, 0과 2 구별, 1과 2 구별 등과 같이 각 숫자의 조합마다 이진 분류기를 훈련

- OvO 계산양: 클래스 N개 → 분류기

- 장점: 각 분류기의 훈련에 전체 훈련 세트 중 구별할 두 클래스에 해당하는 샘플만 필요!!

대부분 이진분류: OvA선호

단 서포트벡터 머신같은 일부 알고리즘(=훈련 세트의 크기에 민감)은 OvO선호

SGD 다중 분류

다중 분류 방식: y_train이용해 여러 클래스 분류 작업

- 3을 구별한 타깃 클래스(y_train_3) 대신0~9까지의 원래 타깃 클래스(y_train)을 사용해 SGDClassifier을 숙련

- 그런다음 예측을 하나함-> 결과: 정확히 맞춤

- 사이킷런이 실제로 10개의 이진 분류기를 훈련시키고 각각의 결정 점수를 얻어 점수가 가장 높은 클래스를 선택

sgd_clf.fit(X_train,y_train) # y_train_3이 아닌 y_train 사용

sgd_clf.predict([some_digit])

>>array([3])다중 분류 방식: decision_function() 이용

- decision_function(): 사이킷런이 실제로 10개의 이진 분류기를 훈련시키고 각각의 결정 점수를 얻어 점수가 가장 높은 클래스를 선택가 맞는지 확인

- 샘플 하나의 점수가 아니라 클래스마다 하나씩, 총 10개의 점수를 반환

some_digit_scores = sgd_clf.decision_function([some_digit])

some_digit_scores # 가장 높은 값: 129738.57895132 -> index = 3임.

>>array([[-620766.3293308 , -303658.02060956, -420512.63999577,

129738.57895132, -612558.43668255, -181995.32698842,

-573231.01600869, -264848.38137796, -192137.27888861,

-272236.34656753]])그리고 SGD 분류기에 대한 추정치 & 실제 값

print('추정값:{}'.format(np.argmax(some_digit_scores))) # index가 3이 맞는지 체크

>>추정값:3

print('sgd가 분류한 mnist값:{}'.format(sgd_clf.classes_))

>>sgd가 분류한 mnist값:[0 1 2 3 4 5 6 7 8 9]

print('sgd가 분류한 minist 인덱스의 실제값:{}'.format(sgd_clf.classes_[3]))

>>sgd가 분류한 minist 인덱스의 실제값:3OvO나 OvA 강제 사용 방법

# OvO나 OvA 강제 사용

from sklearn.multiclass import OneVsOneClassifier

ovo_clf = OneVsOneClassifier(SGDClassifier(max_iter=5, random_state=42))

ovo_clf.fit(X_train, y_train)

ovo_clf.predict([some_digit])

print(ovo_clf.fit(X_train, y_train))

>>OneVsOneClassifier(estimator=SGDClassifier(max_iter=5, random_state=42))

print(ovo_clf.predict([some_digit]))

>>[3]

print(len(ovo_clf.estimators_))

>>45랜덤포레스트 다중 분류

print(forest_clf.fit(X_train, y_train))

>>RandomForestClassifier(n_estimators=10, random_state=42)

print(forest_clf.predict([some_digit]))

>>[3]랜덤포레스트분류기의 경우 직접 클래스를 분류하고 predict_proba()를 사용할 경우 각 분류의 확률이 계산됨

forest_clf.predict_proba([some_digit])

>>array([[0. , 0. , 0. , 0.9, 0. , 0. , 0. , 0. , 0. , 0.1]])분류기 성능 code

cross_val_score(sgd_clf, X_train, y_train, cv= 3, scoring = 'accuracy')

>>array([0.8639 , 0.82125, 0.8595 ])성능 향상: 입력 스케일을 표준화해 정확도를 높임.

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train.astype(np.float64))

cross_val_score(sgd_clf, X_train_scaled, y_train, cv = 3, scoring = 'accuracy')

>>array([0.90985, 0.9104 , 0.9097 ])3.5 에러 분석

1. 오차 행렬 분석 : 분류기의 성능 향상 방안에 대한 통찰을 얻을 수 있다.

- 일단 오차 행렬 만들어보기:

y_train_pred = cross_val_predict(sgd_clf, X_train_scaled, y_train, cv=3)

conf_mx = confusion_matrix(y_train, y_train_pred)

conf_mx

이때 오차 행렬을 이미지로 보고 싶다면:

plt.matshow(conf_mx, cmap=plt.cm.gray)

plt.show()

이 오차 행렬은 대부분의 이미지가 올바르게 분류되었음을 나타내는 주대각선에 위치하므로 매우 좋게 보인다. (배열에서 가장 큰 값은 흰색으로, 가장 작은 값은 검은색으로 나타남)

다만 숫자 5는 상대적으로 어두워 보이는데, 이는 1) 데이터셋에 숫자 5의 이미지가 적거나, 혹은 2) 분류기가 숫자 5를 다른 숫자보다 잘 분류하지 못한다는 뜻이다. 원인을 확인하기 위해 에러 비율을 비교해본다.

에러 비율 비교

row_sums = conf_mx.sum(axis=1, keepdims=True)

norm_conf_mx = conf_mx / row_s니ms

np.fill_diagonal(norm_conf_mx, 0) # 주대각선 0으로 채움

pit.matshow(norm_conf_mx, cmap=plt.cm.gray)

plt.show ()

행은 실제 클래스, 열은 예측한 클래스를 나타낸다.

위 이미지에서 밝은 열(특히 8, 9열)은 많은 이미지가 해당 숫자로 잘못 예측되었음을, 밝은 행(특히 8, 9행)은 해당 숫자가 다른 숫자들과 혼돈이 자주 된다는 것을 의미한다.

- 오차 행렬 분석:

위 그래프를 분석하면 분류기의 성능 향상 방안에 대한 통찰을 얻을 수 있다. 가장 눈에 띄는 밝은 부분들을 분석해보면 아래와 같은 **개선점(문제점)**을 찾을 수 있다.

- 3과 5가 서로 혼돈되는 경우가 많다.

- 분류기가 8, 9를 잘 분류하지 못한다.

이에 대한 개선방안으로는:

- 훈련 데이터를 더 모은다.

- 분류기에 도움 될 만한 특성을 더 찾아본다.

- 어떤 패턴이 드러나도록 (Scikit-Image, Pillow, OpenCV 등을 사용해서) 이미지를 전처리한다.

2. 개개의 에러 분석 : 분류기가 무슨 일을 하고 있고, 왜 잘못되었는지에 대해 통찰을 얻을 수 있다.

단, 더 어렵고 시간이 오래 걸린다. 예시로 3과 5의 샘플을 살펴본다.

- 3과 5의 샘플 그리기:

cl_a, cl_b = 3, 5

X_aa = X_train[(y_train == cl_a) & (y_train_pred == cl_a)]

X_ab = X_train[(y_train == cl_a) & (y_train_pred == cl_b)]

X_ba = X_train[(y_train == cl_b) & (y_train_pred == cl_a)]

X_bb = X_train[(y_train == cl_b) & (y_train_pred == cl_b)]

p it.figure(figsize=(8,8))

plt.subplot(221); plot_digits(X_aa[:25], images_per_row=5)

plt.subplot(222); plot_digits(X_ab[:25], images_per_row=5)

plt.subplot(223); plot_digits(X_ba[:25], images_per_row=5)

plt.subplot(224); plot_digits(X_bb[:25], images_per_row=5)

plt.show()

왼쪽의 블록 두 개는 3으로, 오른쪽 블록 두 개는 5로 분류된 이미지이다. 왼쪽 아래 블록, 오른쪽 위 블록은 잘못 분류된 이미지로 분류기가 실수한 것이다.

- 분석:

분류기가 실수한 원인은 선형 모델인 SGDClassifier를 사용했기 때문이다.

선형 분류기는 클래스마다 픽셀에 가중치를 할당하고 새로운 이미지에 대해 단순히 픽셀 강도의 가중치 합을 클래스의 점수로 계산한다. 이때 3과 5는 몇 개의 픽셀만 다르기 때문에 모델이 쉽게 혼동하게 되는 것이다.

해결 방법으로는 이미지를 중앙에 위치시키고 회전되어 있지 않도록 전처리하는 것을 들 수 있다. 분류기는 이미지의 위치나 회전 방향에 매우 민감하기 때문이다.

3.6 다중 레이블 분류

1. 다중 레이블 분류란?

다중 레이블 분류란 여러 개의 이진 레이블(클래스)을 출력하는 분류 시스템을 말한다. 한 개의 샘플에 여러 개의 이진 레이블이 할당되는 것이다.

2. 예시

한 개의 숫자 이미지에 대해 1)큰 값(7, 8, 9)인지, 2) 홀수인지 여부를 True, False의 이진 레이블로써 판별해주는 모델을 훈련시키고 예측을 만들어본 후 성능을 평가해본다.

#모델 훈련 및 예측

from sklearn.neighbors import KNeighborsClassifier

y_train_large = (y_train >= 7)

y_train_odd = (y_train % 2 == 1)

y_multilabel = np.c_[y_train_large, y_train_odd]

knn_crf = KNeighborsClassifier()

knn_clf.fit(X_train, y_multilabel)

# 예측 만들기

knn_clf.predict([some_digit])

# 출력값(숫자 5를 입력한 경우): array([[False, True]], dtype=bool)- 모델 평가 : 다중 레이블 분류기를 평가하는 적절한 지표는 프로젝트에 따라 다르다. 이 모델의 경우에는 모든 레이블에 대한 F1 점수의 평균을 계산하는 방법을 사용해본다.

y_train_knn_pred = cross_val_predict(knn_clf, X_train, y_multilabel, cv=3,

n_jobs=-1)

f1_score(y_multilabel, y_train_knn_pred, average="macro")

# 출력값: 0.97709078477525위 코드는 모든 레이블의 가중치가 같다고 가정하였으므로 f1_score의 파라미터 average=”macro” 로 설정하였다.

각 레이블의 가중치에 차이를 두고 싶다면 average=”weighted”로 설정하면 된다. 이는 레이블에 클래스의 지지도(타깃 레이블에 속한 샘플 수)를 가중치로 주는 것을 의미한다.

3.7 다중 출력 분류

1. 다중 출력 분류란?

다중 출력 분류, 혹은 다중 출력 다중 클래스 분류란 다중 레이블 분류에서 한 레이블이 다중 클래스가 될 수 있도록 일반화한 것이다. 즉 여러 개의 다중 레이블을 출력할 수 있다.

2. 예시

이미지에서 노이즈를 제거하는 시스템을 만들어본다. 한 개의 입력(노이즈가 많은 숫자 이미지)은 여러 개의 레이블(여러 개의 픽셀)에 대해 여러 개의 값(0부터 255까지 펙셀 강도)을 가진다.

먼저 MNIST 이미지에서 추출한 훈련 세트와 테스트 세트에 노이즈를 추가한다. 타깃 이미지는 원본 이미지가 된다.

noise = rnd.randint(0, 100, (len(X_train), 784)) # [0, 100)의 범위에서 (len(X_train), 784) 형태의 임의의 정수 배열 생성

X_train_mod = X_train + noise

noise = rnd.randint(0, 100, (len(X_test), 784))

X_test_mod = X_test + noise

y_train_mod = X_train

y_test_mod = X_test테스트 세트에서 이미지를 하나 선택하고, 분류기를 훈련시켜 이 이미지를 깨끗하게 만들어 본다. 몰랐지 생성형 모델을 공부하면서 다시 이 MNIST를 만나게 될 거라고는

knn_clf.fit(X_train_mod, y_train_mod)

clean_digit = knn_clf.predict([X_test_mod[some_index]])

plot_digit(clean_digit)

타깃과 매우 비슷하게 깨끗한 이미지로 잘 출력된 것을 확인할 수 있다.

'Experiences & Study' 카테고리의 다른 글

| [Paper]Unsupervised Anomaly Detection with Generative Adversarial Networks to Guide Marker Discovery (1) | 2023.08.29 |

|---|---|

| [이브와] 가상환경(Virtual Box) 포팅,한글 폰트 설치 후 사용하기 (0) | 2023.08.14 |

| [이브와] 윈도우 OS에서 라즈베리 파이 화면연동하기 (1) | 2023.07.26 |

| [사조사 실기] 메모 (0) | 2023.07.02 |

| [이브와] 프로그레시브 웹 앱(PWA)란? (0) | 2023.06.24 |